The Gorilla in the Room: Breaking Free of Innovation Blindness

- owenwhite

- Dec 1, 2024

Innovation promises breakthroughs, transformative ideas, and progress. Yet too often, our efforts to innovate fail—not because of a lack of talent or resources, but because of how we think. Three thinking traps are particularly relevant to innovation: either/or thinking, means-ends reasoning, and the tendency to mistake complex problems—where control of cause and effect is limited—for problems that can be solved with technical expertise and best practices. Together, these traps are the silent saboteurs of creative breakthroughs.

To break free, we need to rethink not just how we solve problems, but how we see them in the first place. Let’s explore.

The Gorilla in the X-Ray

In a study published in 2013, cognitive scientists asked experienced radiologists to search lung CT scans for abnormalities. Unbeknownst to the radiologists, the researchers had inserted an image of a cartoonish gorilla, about the size of a matchbox, into one of the scans.

The results were stunning: 83% of the radiologists failed to see the gorilla. Their expertise had narrowed their focus, making them blind to the unexpected. This phenomenon, known as “inattentional blindness,” highlights a profound truth: we tend to see what we expect to see, and we miss what we are not looking for, even when it is right in front of us.

The implications of this research are significant, particularly for innovation. We are often so focused on known patterns, familiar solutions, or binary choices that we fail to notice opportunities hiding in plain sight. Take Lyme disease as an example. For years, patients with chronic symptoms were dismissed because doctors framed the issue as either “real” or “psychosomatic.” This binary thinking blinded them to a more nuanced explanation involving bacterial infections, immune system responses, and environmental factors. It was only through persistent advocacy by patients and the gradual accumulation of clinical evidence that the medical community began to recognize and address the complexities of chronic Lyme disease.

The lesson? We need to be vigilant not to lock ourselves into cognitive traps that make innovation—and insight—impossible.

The Trap of Either/Or Thinking

One of the most common traps in problem-solving is either/or thinking. It offers a false clarity by reducing choices to a binary: this or that, left or right, cheaper or better. But most problems—and opportunities—don’t fit neatly into such binaries.

Consider the classic elevator problem, a favorite example of behavioral economist Rory Sutherland. Imagine tenants in a busy office building complaining that the elevators are unacceptably slow. Traditional problem-solving frames the issue in technical terms, as a choice between two options: either fix the elevators or replace them. Both solutions are technical, expensive, and time-consuming.

But are these the only options? A creative thinker reframes the problem by asking a question: is the real issue the slow elevators, or the experience of waiting for them? The proposed solution is to install mirrors in the lobby. Suddenly, tenants are too busy checking their reflections to notice the wait. Complaints disappear almost overnight, not because the elevators are faster, but because the problem has been reimagined.

Reframing breaks the constraints of binary thinking. It forces us to step back and question the very way we’ve defined the problem. When we reframe, we move from an A-or-B choice to a broader spectrum of options. The power of reframing lies in expanding the possibilities: by shifting the perspective, entirely new solutions emerge.

This is the essential point: binary thinking narrows our vision, locking us into predictable, limited answers. Real innovation comes when we break out of that trap and learn to see differently.

The Limits of Means-Ends Thinking

If either/or thinking narrows how we frame problems, means-ends thinking limits how we act on them. This approach—defining a goal and creating a path to achieve it—is the bedrock of modern rationality and underpins most of management science. It promises control: set the right goal, create a detailed plan, and you can achieve the outcome you desire.

This model of thinking originates from manufacturing and engineering, where processes are built to deliver consistent, predictable results. It works brilliantly in simple contexts, where cause and effect are clear and repeatable. Baking a cake is a good example: follow the recipe, and the outcome is all but assured.

It also works in complicated contexts, where expertise is required, but the relationship between cause and effect remains discernible. Building a Ferrari engine is a good example. It’s not simple, but with the right tools, expertise, and analysis, the problem can be broken into parts, solved, and reassembled. The whole is the sum of its parts.

But this approach falters when applied to problems that don’t behave like machines. Human systems—businesses, cultures, markets—are not machines. They are dynamic, contextual, and shaped by unpredictable interactions. This makes them fundamentally different from the tightly controlled systems of manufacturing.

Consider a company culture. Leaders often approach cultural transformation as if it were an engineering problem: define the desired culture, design a rollout plan, and execute it. But culture is not a machine. It is a living system, shaped by countless human interactions. A well-crafted plan can be undone by a single offhand comment in a meeting, triggering a cascade of unintended consequences.

This is why Dave Snowden, a pioneer of complexity science, argues that many problems we encounter in business and society exist in complex environments, where cause and effect are not clear-cut and outcomes are not guaranteed. Unlike a factory, where inputs and processes lead predictably to outputs, human systems are shaped by relationships, perceptions, and contexts that defy standardization.

This distinction is particularly important in the context of “wicked problems.” As Watkins and Wilber explain in Wicked and Wise, these problems are characterized by multiple, interdependent causes, no single technical solution, and the involvement of many stakeholders with differing perspectives and priorities. Wicked problems evolve over time, with new symptoms or challenges arising as others are addressed. Solutions in one area often create unintended consequences in another.

For example, a plan to build more affordable housing might face resistance from communities worried about property values or overcrowding, while subsidies for clean energy might inadvertently raise energy prices for low-income households. Wicked problems demand a way of thinking that is fundamentally different from means-ends reasoning. They require approaches that balance technical, social, and psychological change.

The Cynefin Framework: Navigating Complex Systems

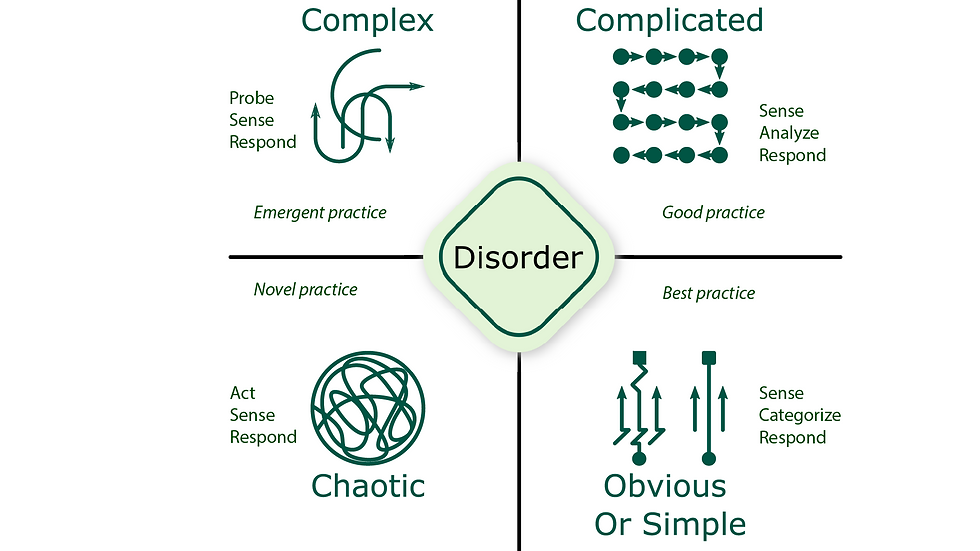

Dave Snowden’s Cynefin framework offers a powerful way to understand why certain approaches fail in complex environments. Cynefin (a Welsh word, pronounced roughly “kuh-NEV-in”) sorts problems into four main domains, each with its own logic and approach:

• Obvious (Simple) Problems: These are predictable and repeatable. The relationship between cause and effect is clear and obvious, like following a recipe. The correct response is to Sense, Categorize, and Respond. Best practices work well in this domain because of its stability. However, when practice in this domain becomes over-rigid, it can collapse into chaos as exceptions arise.

• Complicated Problems: In this domain, cause and effect are not obvious but can be discerned with expertise. Fixing a Ferrari engine or diagnosing a medical condition are examples. The approach here is to Sense, Analyze, and Respond, relying on expert input and good practices to create reliable outcomes.

• Complex Problems: These systems defy linear relationships between cause and effect. Cause and effect can only be understood in hindsight, and outcomes emerge through interaction and feedback. Examples include cultural transformation, innovation, or navigating volatile markets. The approach is to Probe, Sense, and Respond through experimentation. Safe-to-fail experiments help reveal what works without risking everything.

• Chaotic Problems: In chaotic situations, there is no discernible cause-and-effect relationship. Immediate action is required to stabilize the situation. The correct approach is to Act, Sense, and Respond, prioritizing novel practices to regain control.
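For readers who think in code, the four domains listed above can be sketched as a simple lookup. This is only an illustration, not part of Snowden's framework: the `classify` function and its three yes/no questions are hypothetical simplifications introduced here, and real situations rarely sort this cleanly.

```python
from enum import Enum


class Domain(Enum):
    OBVIOUS = "obvious"
    COMPLICATED = "complicated"
    COMPLEX = "complex"
    CHAOTIC = "chaotic"


# Response sequence and kind of practice for each domain, as listed above.
PLAYBOOK = {
    Domain.OBVIOUS: (("sense", "categorize", "respond"), "best practices"),
    Domain.COMPLICATED: (("sense", "analyze", "respond"), "good practices and expert input"),
    Domain.COMPLEX: (("probe", "sense", "respond"), "safe-to-fail experiments"),
    Domain.CHAOTIC: (("act", "sense", "respond"), "novel practices"),
}


def classify(clear_to_everyone: bool, discernible_by_experts: bool,
             visible_in_hindsight: bool) -> Domain:
    """Hypothetical triage: how knowable is cause and effect in this situation?"""
    if clear_to_everyone:
        return Domain.OBVIOUS
    if discernible_by_experts:
        return Domain.COMPLICATED
    if visible_in_hindsight:
        return Domain.COMPLEX
    return Domain.CHAOTIC


def recommended_approach(domain: Domain) -> str:
    """Format the response sequence and practice type for a domain."""
    steps, practice = PLAYBOOK[domain]
    return " -> ".join(steps) + " (" + practice + ")"


# Cultural transformation: cause and effect only make sense in hindsight.
culture = classify(clear_to_everyone=False, discernible_by_experts=False,
                   visible_in_hindsight=True)
print(recommended_approach(culture))
```

The point of the sketch is the order of the questions: only when cause and effect are neither obvious nor discoverable by analysis does the recommended approach switch from analysis to probing.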

The mistake we often make is treating complex problems as though they were simple or complicated. We bring an engineering mindset to challenges that resist control and predictability. Human systems, unlike machines, are shaped by emotion, context, and emergent behaviors. Success in complexity comes not from rigid plans but from iterative learning, collaboration, and adaptation.

Breaking Free from the Traps

How do we escape these traps? Snowden and others suggest practical principles:

• Focus on Experiments over Planning: In complex environments, outcomes are unpredictable. Run multiple small experiments—what Snowden calls “safe-to-fail” probes. These allow you to test ideas without risking everything.

• Reframe the Problem: Shift your perspective. Ask questions that challenge assumptions and reveal new possibilities, as in the elevator example.

• Embrace Ambiguity: Success in complexity often emerges through iterative action and reflection. Be prepared to adapt and respond as the situation evolves.

• Observe Patterns: Pay close attention to the dynamics of the system. Look for patterns, connections, and feedback loops to inform your next steps.
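The first principle can be made concrete with a small sketch. Everything in it is an assumption for illustration: the `Probe` fields, the `max_loss` threshold, the "amplify" and "dampen" labels, and the numbers in the elevator example are invented, not drawn from Snowden's work or from any real data.

```python
from dataclasses import dataclass


@dataclass
class Probe:
    name: str
    cost: float    # what is lost if the probe fails (illustrative units)
    signal: float  # observed effect after running it; positive means promising


def review_probes(probes: list[Probe], max_loss: float) -> dict[str, str]:
    """Decide what to do with each probe.

    A probe counts as safe-to-fail only if its cost is within max_loss.
    Safe probes with a positive signal are amplified; the rest are dampened.
    """
    decisions = {}
    for probe in probes:
        if probe.cost > max_loss:
            decisions[probe.name] = "too costly: shrink it first"
        elif probe.signal > 0:
            decisions[probe.name] = "amplify"
        else:
            decisions[probe.name] = "dampen"
    return decisions


# Hypothetical probes for the elevator example (all numbers are made up).
probes = [
    Probe("mirrors in the lobby", cost=2.0, signal=0.6),
    Probe("wait-time display", cost=3.0, signal=-0.1),
    Probe("replace the elevators", cost=500.0, signal=0.0),
]
decisions = review_probes(probes, max_loss=10.0)
for name, decision in decisions.items():
    print(name + ": " + decision)
```

Running several cheap probes in parallel and reviewing them together is what keeps any single failure affordable; the expensive option is not rejected outright, only flagged as something to shrink before testing.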

Conclusion

At its core, innovation is not about imposing control but creating the conditions for insight to emerge. Recognizing when we are dealing with complexity—and shifting our approach accordingly—can open up new possibilities for solving the most challenging problems.

The next time you face an intractable problem, ask yourself: Are you mistaking a complex environment for a simple or complicated one? And if so, what might happen if you stopped trying to control the system—and started exploring it instead?
